Text by Jennifer F. Manongdo

Photo by Sarah Hazel Moces S. Pulumbarit

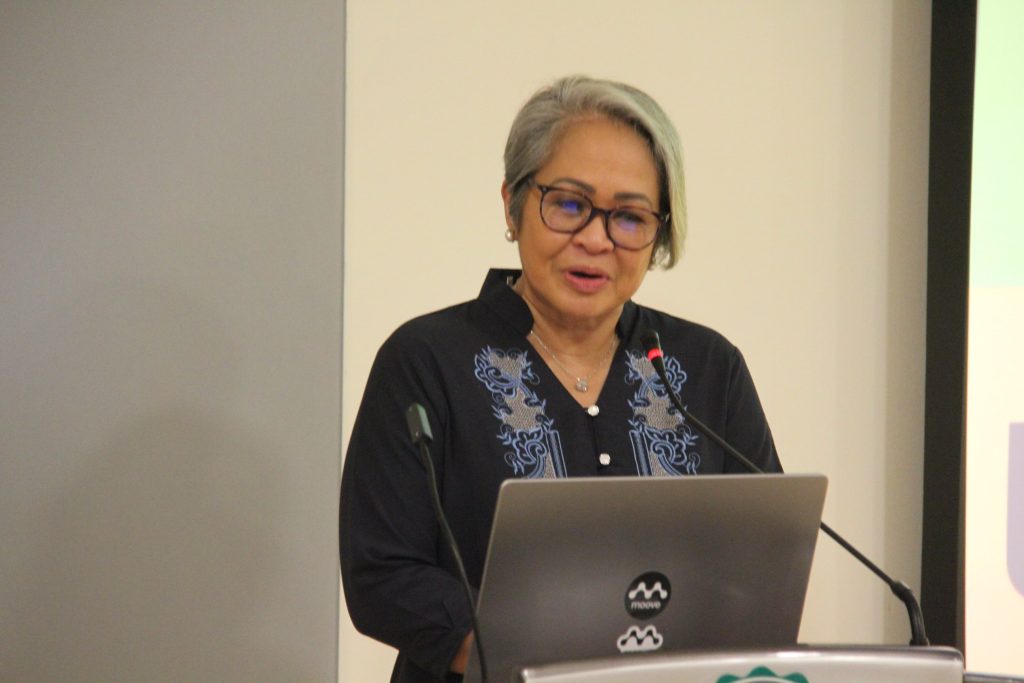

Dr. Mary-Anne Hartley invited medical experts at the University of the Philippines Manila to contribute to the development of Meditron.

“What are the risk factors for ‘bangungot’?” a member of the audience eagerly asked at an event on medical artificial intelligence (AI) led by Dr. Mary-Anne Hartley at Henry Sy, Sr. on Feb. 3, 2025. (Bangungot is the Filipino term for sudden, unexplained death during sleep.) Within seconds, the screen displayed a side-by-side comparison of the on-point responses of two AI-powered chatbots, much to the amusement of the medical specialists present.

“Sana Tagalog” (“Hopefully in Tagalog”), the audience member added. Her remark hinted at her curiosity about how the AI large language models (LLMs) would explain, in Tagalog, a question that many people ask.

Dr. Hartley, who leads the Laboratory of Intelligent Global Health Technologies (LiGHT) based at Biomedical Informatics and Data Science (BIDS), Yale School of Medicine, took the opportunity to invite the physicians present to “represent the Filipino community” by volunteering to help train Meditron, an emerging open-source medical LLM, so that it becomes more inclusive and impactful in the medical community, especially in low-resource settings.

“You can use your own expertise as medical doctors to make these models change to your expectations. This is what we’re trying to do. We’re trying to formalize this process so that we can get a community to make sure that we can validate and evaluate them and align them with our context and make models that suit our setting,” she told the audience.

Meditron is a suite of open-source large multimodal foundation models designed to assist with clinical decision-making and diagnosis. It was trained on high-quality medical data sources and builds on Llama 2, the competitor to OpenAI’s ChatGPT and Google’s Gemini from Meta, Facebook’s parent company.

Dr. Hartley co-leads a team of researchers from the Swiss technical university EPFL (École Polytechnique Fédérale de Lausanne) and the Yale School of Medicine. In developing Meditron, the team works closely with humanitarian organizations, including the International Committee of the Red Cross (ICRC), to make the AI useful in low-resource medical environments such as remote areas of South Africa, where she is based.

Since its release in 2024, Meditron has drawn strong interest from medical professionals and humanitarian workers, many of whom have volunteered to take part in the Meditron MOOVE (Massive Online Open Validation and Evaluation). Through this initiative, doctors pose challenging questions to the medical AI and evaluate its answers, giving developers important feedback for improving upcoming models.

Challenges

But while AI is improving the medical landscape, it also poses a host of challenges for users.

A participant in the forum expressed concern about AI’s potential to produce quack doctors and lazy doctors.

“It’s more of a worry that I’m going to talk about. Number one is that I fear that sometime soon, we will have AI arbularyos (quack doctors),” the participant said. “Any student who knows how to access, he will be there and say, ‘Oh, you might have this, you might have that.’ So that is now a modern-day arbularyo.”

The same participant also fears that medical AI might encourage a generation of “lazy doctors” who rely more on technology than on experience and sound medical judgment.

Dr. Hartley reminded the audience of the importance of validating data when applying AI in clinical settings. She said AI-driven medical programs should serve only as guides, not as a replacement for critical thinking.

“You have to have a critical understanding of the answer first, because there is a risk of hallucination,” Dr. Hartley warned. “The AI might not always be correct, and we must be cautious of that risk.” Her caution underscores the need for a culture of constant vigilance whenever AI is used in patient care.

Technology giant IBM says AI hallucination occurs when “algorithms produce outputs that are not based on training data, are incorrectly decoded by the transformer or do not follow any identifiable pattern. In other words, it ‘hallucinates’ the response.”